Las Vegas - The 2026 Consumer Electronics Show is opening with a bold message: AI infrastructure is stepping up a gear. Nvidia got ahead of the official show by revealing the Vera Rubin architecture, a new generation of AI compute designed to accelerate workloads and scale to meet surging demand for AI across the cloud and enterprise.

Nvidia: Vera Rubin is Production-Ready and Faster Than Blackwell

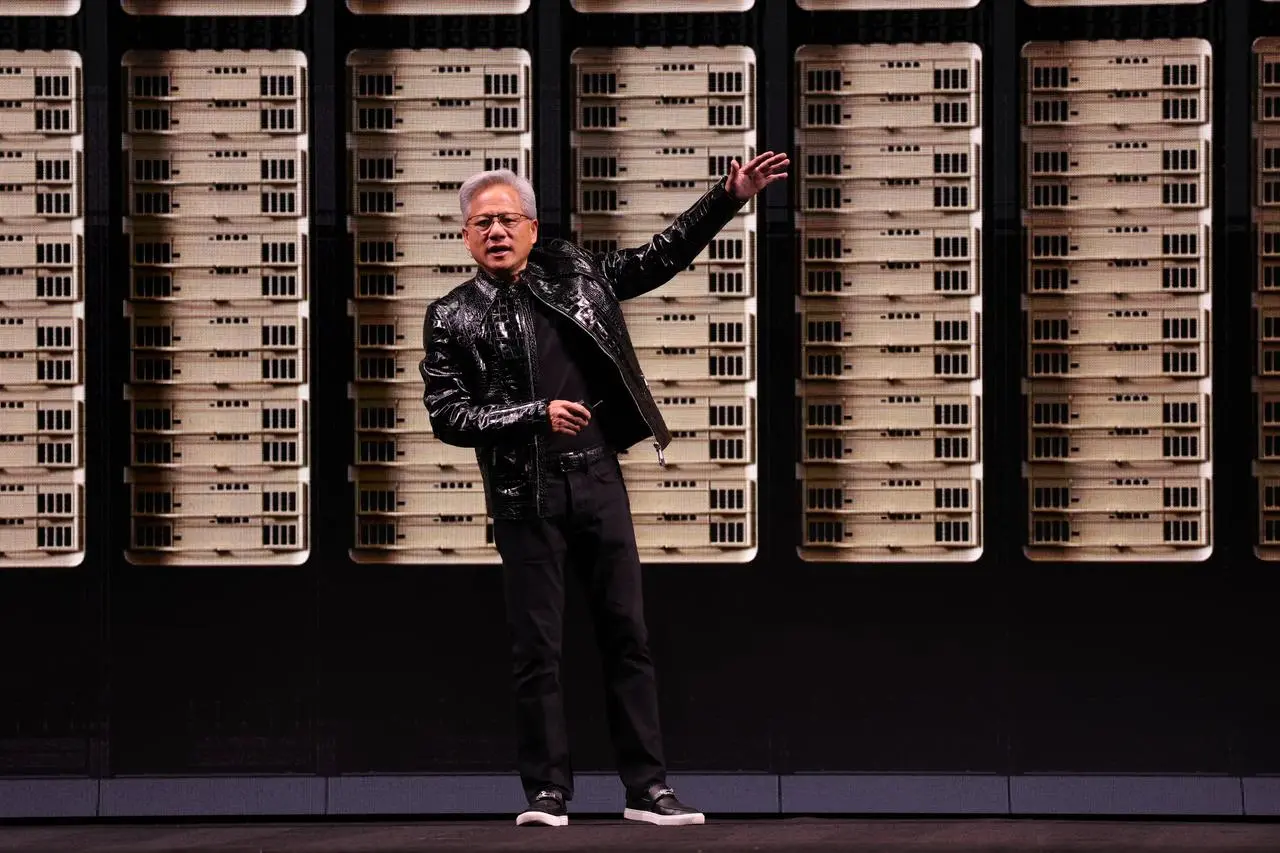

Nvidia CEO Jensen Huang formally announced Vera Rubin ahead of the CES keynote, saying the architecture is now in production and expected to ramp up volume in the second half of 2026. Rubin follows last year’s blockbuster Blackwell chip, as demand for AI infrastructure continues to surge.

In a briefing before Huang’s CES keynote, Nvidia’s HPC and AI infrastructure head, Dion Harris, described Vera Rubin as “six chips that make one AI supercomputer.” Huang framed Rubin as a response to the explosive compute needs of modern AI, noting that the platform delivers a meaningful leap over Blackwell in both speed and efficiency.

Key performance highlights include:

- More than triple the speed of Blackwell for AI workloads.

- Inference performance projected to be about five times faster than Blackwell for select tasks.

- Substantially higher inference compute per watt, underscoring efficiency gains as models grow larger and more capable.

The Rubin architecture was first announced in 2024 and is now positioned to gradually replace Blackwell in Nvidia’s lineup. Nvidia emphasized that Rubin is designed to handle more complex, agent-style AI workloads and to support expanded networking and data movement across large-scale deployments.

Rubin systems are already slated for deployment across major cloud platforms and AI providers. Nvidia listed Amazon Web Services, OpenAI, and Anthropic among the expected users, with the Lawrence Berkeley National Laboratory’s upcoming Doudna system also set to run on the new platform.

A Record-Breaking Year for Nvidia Data Centers

Nvidia’s hardware cycle is riding high on a wave of demand for AI infrastructure. The company reported a record year for data center revenue, up about 66% year over year, driven by sustained demand for Blackwell and Blackwell Ultra GPUs. Nvidia executives projected continued strength as more organizations scale their AI workloads from experimentation to production.

Huang estimated that global spending on AI infrastructure could reach between $3 trillion and $4 trillion over the next five years, underscoring the scale of the opportunity for hardware providers at CES and beyond.

What This Means for AI and Tech Markets

Rubin’s rollout signals the industry’s readiness to move from AI experimentation to large-scale deployment. For cloud providers and enterprise buyers, Rubin promises a step-change in compute efficiency and throughput. That could lower the total cost of running massive AI models and shorten time-to-insight for applications in healthcare, finance, manufacturing, and consumer electronics.

As AI demand and cloud-scale workloads continue to grow, how smoothly Rubin ramps up, including whether supply-chain bottlenecks emerge, will likely set the tone for AI hardware pricing and availability in the second half of 2026 and into 2027.